AI and robotics are indeed advancing rapidly, with several groundbreaking developments happening just this week. Let me provide an in-depth analysis of the points you raised:

1. NVIDIA’s Project GR00T:

This foundation model represents a significant step towards endowing robots with a comprehensive understanding of their surroundings and tasks. By leveraging multimodal perception and sensor fusion, Project GR00T enables robots to process and comprehend various types of data, including images, videos, audio, and text, simultaneously. This capability is crucial for robots to navigate complex environments and interact with the world effectively.

The self-supervised learning techniques employed in training Project GR00T allow the model to learn from vast amounts of unlabeled data, providing it with a broader understanding of the world without relying solely on manually labeled data. This approach could significantly reduce the time and resources required to train robots for various applications.

2. NVIDIA Blackwell:

NVIDIA’s Blackwell platform targets the cost and energy consumption of AI systems. NVIDIA claims up to 25 times lower cost and energy consumption than an H100 for large language model inference, which could make advanced AI capabilities more accessible and environmentally sustainable for a wider range of applications.

3. Neuralink’s BCI and Online Chess:

Neuralink’s brain-computer interface (BCI) enabling a patient to play online chess by thoughts alone is a remarkable milestone in the field of human-computer interaction. The successful implantation and the patient’s positive experience highlight the potential of BCI technology to enhance human capabilities and assist individuals with disabilities.

4. Open Interpreter 01 Light:

The 01 Light is an intriguing development in the realm of open-source voice interfaces. By allowing users to control applications and enable AI to learn skills and observe screens through a portable device, it could pave the way for more seamless and intuitive human-AI interactions in various contexts.

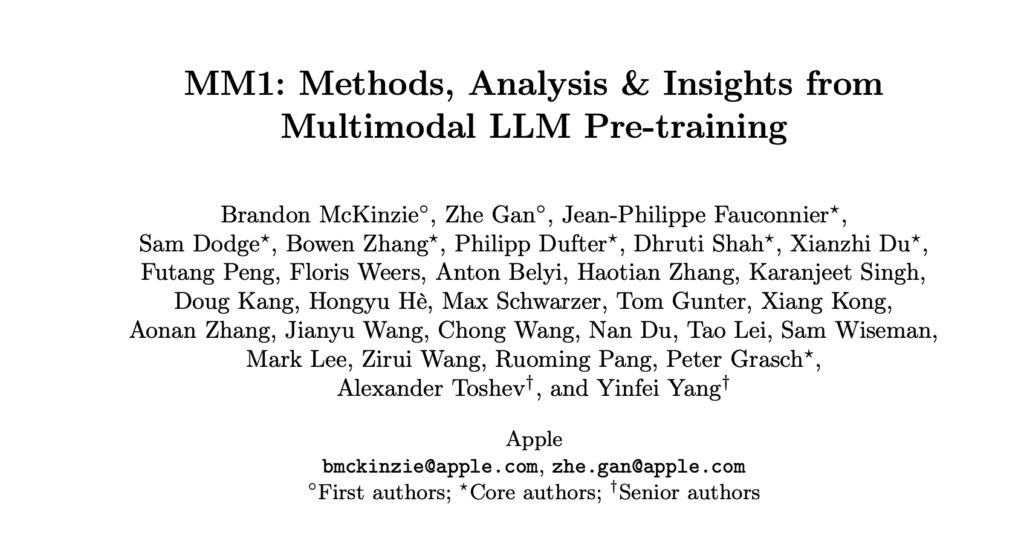

5. Apple’s MM1 Multimodal AI Models:

Apple’s MM1 family of multimodal AI models represents a significant advancement in the field of multimodal learning. The ability of these models to learn from only a handful of examples and reason over multiple images showcases the potential for more efficient and effective AI systems that can adapt to different tasks and scenarios with minimal training data.

6. Gemini Integration into iPhone:

The potential integration of Gemini into the iPhone could be a game-changer, bringing advanced AI capabilities to more than a billion users. This development could democratize access to cutting-edge AI technologies and enable a wide range of innovative applications and services for mobile users.

7. NVIDIA Earth-2:

NVIDIA’s Earth-2 cloud platform is a promising development in climate modeling and weather forecasting. By combining AI and digital twin technology, Earth-2 could provide more accurate and reliable predictions, enabling better preparedness and mitigation strategies for extreme weather events.

8. Google’s VLOGGER:

Google’s VLOGGER model is an impressive achievement in the field of AI-generated media. By enabling the generation of talking avatar videos with full upper body motion from just a still image and audio clip, VLOGGER could have a wide range of applications in areas such as virtual assistants, online education, and content creation.

9. xAI’s Grok-1:

The release of the weights and architecture of xAI’s Grok-1 model is a significant contribution to the AI community. With its massive 314B parameters and efficient computation enabled by the Mixture-of-Experts approach, Grok-1 could serve as a powerful foundation for various AI applications and further research in the field of large language models.
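To make the Mixture-of-Experts idea concrete, here is a minimal sketch of top-k expert routing. It is a toy illustration, not Grok-1's actual code: the scalar "experts", the hard-coded gate scores, and the names `top_k_route` and `moe_forward` are all assumptions made for the example. The choice of 8 experts with 2 active per token follows the commonly reported Grok-1 configuration, but treat it as an assumption here as well.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_route(gate_logits, k=2):
    """Pick the k highest-scoring experts and renormalize their weights."""
    ranked = sorted(range(len(gate_logits)),
                    key=lambda i: gate_logits[i], reverse=True)
    chosen = ranked[:k]
    weights = softmax([gate_logits[i] for i in chosen])
    return list(zip(chosen, weights))


def moe_forward(x, experts, gate_logits, k=2):
    """Run only the selected experts and mix their outputs by gate weight."""
    routes = top_k_route(gate_logits, k)
    output = sum(w * experts[i](x) for i, w in routes)
    used = [i for i, _ in routes]
    return output, used


# Hypothetical toy setup: expert i simply multiplies its input by (i + 1).
NUM_EXPERTS = 8
experts = [lambda x, s=s: s * x for s in range(1, NUM_EXPERTS + 1)]

# In a real model these scores come from a learned gating layer per token;
# they are hard-coded here purely for illustration.
gate_logits = [0.1, 2.0, -1.0, 0.3, 2.0, 0.0, -0.5, 1.0]

output, used = moe_forward(3.0, experts, gate_logits)
print(f"experts used: {used}, output: {output}")
```

Only 2 of the 8 experts run for this input, which is the source of the efficiency: the total parameter count can be very large while the compute per token stays close to that of a much smaller dense model.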

10. Stanford’s Quiet-STaR:

The Quiet-STaR training method developed by Stanford researchers is an intriguing approach to improving the reasoning capabilities of AI models. By enabling models to “think” before responding, Quiet-STaR could lead to more coherent and well-reasoned outputs, potentially enhancing the performance of AI systems in various tasks.

11. MindEye2 from Stability AI and Princeton:

The MindEye2 model represents a significant leap in reconstructing images from brain activity. By connecting brain data to an image generation model, MindEye2 can produce photorealistic reconstructions, which could have important implications for various fields, such as neuroscience, brain-computer interfaces, and even creative applications.

12. Yell At Your Robot (YAY Robot):

The YAY Robot method developed by Stanford and UC Berkeley is an innovative approach to improving the performance of robots on long-horizon tasks. By utilizing natural language feedback from humans, this method could enable more effective robot learning and adaptation, leading to better task execution and human-robot collaboration.

13. HumanoidBench from Berkeley AI:

The HumanoidBench benchmark introduced by Berkeley AI is a valuable contribution to the field of humanoid robot control and learning. By providing a standardized platform for evaluating and advancing algorithms in this domain, HumanoidBench could accelerate the development of more capable and intelligent humanoid robots.

14. Maisa’s Knowledge Processing Unit:

Maisa’s Knowledge Processing Unit aims to set new standards in reasoning, comprehension, and problem-solving by combining the power of large language models with decoupled reasoning and data processing. This approach could lead to more robust AI systems capable of tackling complex tasks that require deep understanding and reasoning.

15. Sakana AI’s Japanese AI Models:

The release of Sakana AI’s new Japanese AI models using a novel training method is an interesting development in the field of natural language processing. If this alternative training path proves scalable, it could offer new avenues for improving the performance and capabilities of AI models across various languages and domains.

Overall, these developments highlight the rapid progress being made in AI and robotics, with advancements spanning a wide range of areas, including multimodal perception, human-computer interaction, large language models, reasoning capabilities, and application-specific domains such as climate change, neuroscience, and humanoid robotics. The continued innovation and collaboration in these fields hold immense potential for transforming various industries and aspects of our lives.